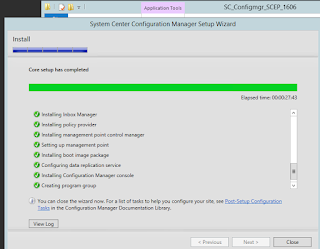

OK, that's no big deal for anyone else, but for me it was a three-day battle with lots of dead-end installs, undos and redos. So, at long last, this is the famous screen I wanted to see so much! All green! /me happy now, thanks Prajwal Desai

Wednesday, October 19, 2016

Monday, September 19, 2016

Connect your Jira instance to HipChat

Last year I got the chance to manage an Atlassian Jira and Confluence server. That has been fun so far. But last week I was given a new task: fire up a HipChat instance and connect it with Jira. I wasted some days figuring out what exactly to do, so to anyone getting here via Google: you are lucky, because I can tell you everything that you won't find in any Atlassian docs. Here are the steps I took.

1: Download your HipChat VM image and import it into a VMware host. (Change the RAM, NIC etc. settings according to your needs.)

2: Start it and log in on the console with admin / hipchat. (To become root, type: sudo /bin/dont-blame-hipchat)

3: Set up static IP networking with a command like this: hipchat network -m static -i 192.168.100.20 -s 255.255.255.0 -g 192.168.100.254 -r 192.168.100.254

4: Open /etc/hosts for editing and add: 192.168.100.20 hipchat hipchat.mynetwork.local

5: On your nameserver, create a new record for HipChat, e.g. hipchat.mynetwork.local (192.168.100.20)

6/a: Generate a self-signed SSL certificate, or

6/b: request a certificate from an external certificate provider (see below *)

7: Finish the HipChat install using your (trial) licence and this certificate. (The certificate and hostname can be changed later.)

8: Install the HipChat connect add-on in your Jira.

9: Here comes the tricky part that drove me nuts. You can't simply make Jira connect to HipChat, because the Java runtime in Jira won't trust HipChat's certificate by default. You will notice that if you check the catalina.out log file in Jira: cat /opt/atlassian/jira/logs/catalina.out :

/rest/hipchat/integration/latest/installation/complete [c.a.p.hipchat.rest.HipChatLinkResource] javax.net.ssl.SSLHandshakeException: sun.security.validator.ValidatorException: PKIX path building failed: sun.security.provider.certpath.SunCertPathBuilderException: unable to find valid certification path to requested target

javax.net.ssl.SSLHandshakeException: sun.security.validator.ValidatorException: PKIX path building failed: sun.security.provider.certpath.SunCertPathBuilderException: unable to find valid certification path to requested target

So you have two choices.

First: manually add your cert to the trusted Java store. Get your server's public certificate, detailed here. Once you have it in a file, execute this command (check your paths, of course):

/opt/atlassian/jira/jre/bin/keytool -import -alias hipchat.mighty.org -keystore /opt/atlassian/jira/jre/lib/security/cacerts -file /certs/mypubhipchat.crt

It asks you for a password. What the heck, what kind of password, you might ask! It is the password of the Java certificate store. The default is changeit, so unless somebody changed it on your system, enter: changeit

Second method: install the SSL for Jira add-on. It's easier.

See the attached screenshot: it assists you in installing the server certificate. It creates an updated but temporary Java keystore file; you have to copy it over the production keystore later and then restart the whole Jira.

10: Success! (almost...)

* 6/b: in this case you'll need an external FQDN, so you have to own a domain name. For example, if you own the mighty.org domain name, do the following:

- create a CSR for hipchat.mighty.org on your favorite Linux system.

- request a trusted certificate for hipchat.mighty.org from a trusted third-party certificate provider.

- in your INTERNAL(!) nameserver, create a new zone called hipchat.mighty.org and assign 192.168.100.20 to its @ value.
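The certificate handling in steps 6/b and 9 can be sketched in shell roughly like this. It is only a minimal sketch, assuming openssl is installed and HipChat answers HTTPS on port 443. The function names (make_csr, extract_first_cert, fetch_cert, import_cert) and the --run switch are made up for illustration; the host name and paths are the ones used in the steps above, so check them against your own system.

```shell
#!/usr/bin/env bash
# Sketch only: certificate helpers for the HipChat/Jira steps above.
# Function names are hypothetical; paths and host name come from the post.

HC_HOST="hipchat.mighty.org"
KEYTOOL="/opt/atlassian/jira/jre/bin/keytool"
CACERTS="/opt/atlassian/jira/jre/lib/security/cacerts"
CERT_FILE="/certs/mypubhipchat.crt"

# Step 6/b: create a private key and a CSR for the given FQDN
# (writes FQDN.key and FQDN.csr into the current directory).
make_csr() {
  openssl req -new -newkey rsa:2048 -nodes \
    -keyout "$1.key" -out "$1.csr" -subj "/CN=$1"
}

# Print only the first PEM certificate block found on stdin.
extract_first_cert() {
  awk '/-----BEGIN CERTIFICATE-----/ {p=1}
       p {print}
       /-----END CERTIFICATE-----/ && p {exit}'
}

# Step 9: ask the server for the certificate it presents and save it as PEM.
fetch_cert() {
  openssl s_client -connect "$1:443" -servername "$1" </dev/null 2>/dev/null \
    | extract_first_cert > "$2"
}

# Step 9: import the saved certificate into Jira's Java trust store.
# keytool prompts for the store password (default: changeit).
import_cert() {
  "$KEYTOOL" -import -alias "$1" -keystore "$CACERTS" -file "$2"
}

# Nothing runs unless the script is started with --run.
if [ "${1:-}" = "--run" ]; then
  fetch_cert "$HC_HOST" "$CERT_FILE" && import_cert "$HC_HOST" "$CERT_FILE"
fi
```

Jira still has to be restarted after the import so that the Java runtime re-reads the trust store.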

Tuesday, July 26, 2016

How to set up Icinga2 and Icingaweb on CentOS

On your newly installed CentOS server:

# this is my network setup for my own usage, won't fit yours :)

cat /etc/sysconfig/network-scripts/ifcfg-eth0

TYPE="Ethernet"

BOOTPROTO="static"

DEFROUTE="yes"

IPV4_FAILURE_FATAL="no"

NAME="eth0"

UUID="2ef9cace-1428-4dbf-aac7-7993463c359a"

DEVICE="eth0"

ONBOOT="yes"

IPADDR=192.168.183.235

NETMASK=255.255.254.0

NETWORKING=yes

HOSTNAME=s1

GATEWAY=192.168.183.254

NM_CONTROLLED=no

yum -y install deltarpm

yum -y install wget net-tools bind-utils gcc mc

setenforce 0 # :(

mcedit /etc/selinux/config >> change enabled to SELINUX=disabled or SELINUX=permissive

yum -y update && yum -y upgrade

yum install -y epel-release

rpm --import http://packages.icinga.org/icinga.key

wget http://packages.icinga.org/epel/ICINGA-release.repo -O /etc/yum.repos.d/ICINGA-release.repo

yum makecache

yum install -y nagios-plugins-all icinga2 icinga2-ido-mysql icinga-idoutils-libdbi-mysql

yum install -y httpd php-cli php-pear php-xmlrpc php-xsl php-pdo php-soap php-gd php-ldap

mcedit /etc/php.ini >> set date.timezone = Europe/YOURZONE

systemctl enable httpd && systemctl start httpd

yum install -y mariadb-server

systemctl start mariadb

systemctl enable mariadb

netstat -nlp | grep 3306 # (check if it runs)

mysql -u root

> use mysql;

> update user set password=PASSWORD("root_password") where User='root';

> flush privileges;

> exit

systemctl restart mariadb

mysql -u root -p

> CREATE DATABASE icinga2;

> GRANT SELECT, INSERT, UPDATE, DELETE, DROP, CREATE VIEW, INDEX, EXECUTE ON icinga2.* TO 'icinga2'@'localhost' IDENTIFIED BY 'icinga2_password';

> flush privileges;

> exit

mysql -u root -p icinga2 < /usr/share/icinga2-ido-mysql/schema/mysql.sql

mcedit /etc/icinga2/features-available/ido-mysql.conf

>> change: user = "icinga2"

>> password = "icinga2_password"

>> host = "localhost"

>> database = "icinga2"

systemctl enable icinga2 && systemctl start icinga2

tail -f /var/log/icinga2/icinga2.log # (check if it runs)

icinga2 feature enable command

icinga2 feature list # (to check)

systemctl restart icinga2

yum -y install icingaweb2 icingacli

grep icingaweb2 /etc/group # check if it's icingaweb2:x:990:apache

touch /var/www/html/index.html

chown apache /var/www/html/index.html

icingacli setup config directory --group icingaweb2

icingacli setup token create # get the token to the clipboard

icingacli setup token show # in case you missed it

systemctl restart httpd

# open a browser and type the IP address or FQDN of your server. That will be icinga.infokom.local in my case.

# next, next, you should see everything green

>authentication : database

>Database type: MySQL

>Host: localhost

>Database name: icingaweb2

>Username: myself

>Password: *********

>Character set: utf8

#rest of the web based setup detailed here with screenshots:

#
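The two manual mcedit changes from the server setup (SELinux mode and the PHP time zone) could also be scripted. This is a minimal sketch, assuming GNU sed as shipped with CentOS; the function names set_selinux_mode and set_php_timezone are made up for illustration, and Europe/Budapest in the usage line is just an example zone.

```shell
#!/usr/bin/env bash
# Sketch only: non-interactive versions of the two mcedit steps above.
# Function names are hypothetical; requires GNU sed (sed -i).

# Set SELINUX=<mode> in an SELinux config file.
# $1 = config file, $2 = enforcing | permissive | disabled
# The SELINUXTYPE= line and commented lines are left alone.
set_selinux_mode() {
  sed -i "s/^SELINUX=.*/SELINUX=$2/" "$1"
}

# Set date.timezone in a php.ini file.
# $1 = php.ini path, $2 = zone name such as Europe/Budapest
# Matches both the commented default (";date.timezone =") and an existing value.
set_php_timezone() {
  sed -i "s|^;\{0,1\}[[:space:]]*date\.timezone[[:space:]]*=.*|date.timezone = $2|" "$1"
}
```

Usage on the server would look like: set_selinux_mode /etc/selinux/config permissive, then set_php_timezone /etc/php.ini Europe/Budapest, followed by the httpd restart that is already part of the steps.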

#Now it's time to add your first node to your server.

#On the server, run:

icinga2 node wizard

Welcome to the Icinga 2 Setup Wizard!

We'll guide you through all required configuration details.

Please specify if this is a satellite setup ('n' installs a master setup) [Y/n]: n

Starting the Master setup routine...

Please specifiy the common name (CN) [icinga.infokom.local]: Press Enter

Checking for existing certificates for common name 'icinga.infokom.local'...

Certificates not yet generated. Running 'api setup' now.

information/cli: Generating new CA.

information/base: Writing private key to '/var/lib/icinga2/ca/ca.key'.

information/base: Writing X509 certificate to '/var/lib/icinga2/ca/ca.crt'.

information/cli: Generating new CSR in '/etc/icinga2/pki/icinga.infokom.local.csr'.

information/base: Writing private key to '/etc/icinga2/pki/icinga.infokom.local.key'.

information/base: Writing certificate signing request to '/etc/icinga2/pki/icinga.infokom.local.csr'.

information/cli: Signing CSR with CA and writing certificate to '/etc/icinga2/pki/icinga.infokom.local.crt'.

information/cli: Copying CA certificate to '/etc/icinga2/pki/ca.crt'.

Generating master configuration for Icinga 2.

information/cli: Adding new ApiUser 'root' in '/etc/icinga2/conf.d/api-users.conf'.

information/cli: Enabling the 'api' feature.

Enabling feature api. Make sure to restart Icinga 2 for these changes to take effect.

information/cli: Dumping config items to file '/etc/icinga2/zones.conf'.

information/cli: Created backup file '/etc/icinga2/zones.conf.orig'.

Please specify the API bind host/port (optional): Press Enter

Bind Host []: Press Enter

Bind Port []: Press Enter

information/cli: Created backup file '/etc/icinga2/features-available/api.conf.orig'.

information/cli: Updating constants.conf.

information/cli: Created backup file '/etc/icinga2/constants.conf.orig'.

information/cli: Updating constants file '/etc/icinga2/constants.conf'.

information/cli: Updating constants file '/etc/icinga2/constants.conf'.

information/cli: Updating constants file '/etc/icinga2/constants.conf'.

Done.

# check the output if it's OK

egrep 'NodeName|TicketSalt' /etc/icinga2/constants.conf

mcedit /etc/icinga2/zones.conf

# change the string NodeName to your FQDN, in my case:

cat /etc/icinga2/zones.conf

object Endpoint "icinga.infokom.local" {

}

object Zone ZoneName {

endpoints = [ "icinga.infokom.local" ]

}

systemctl restart icinga2.service

# to add my first client server, named s2, I need a ticket

icinga2 pki ticket --cn 's2.infokom.local'

# On the client server:

yum install -y epel-release

rpm --import http://packages.icinga.org/icinga.key

wget http://packages.icinga.org/epel/ICINGA-release.repo -O /etc/yum.repos.d/ICINGA-release.repo

yum makecache

yum install icinga2 mc

setenforce 0 # :(

mcedit /etc/selinux/config >> change enabled to SELINUX=disabled or SELINUX=permissive

icinga2 node wizard

Welcome to the Icinga 2 Setup Wizard!

We'll guide you through all required configuration details.

Please specify if this is a satellite setup ('n' installs a master setup) [Y/n]: Enter

Starting the Node setup routine...

Please specifiy the common name (CN) [s2.infokom.local]: Enter

Please specifiy the local zone name [s2.infokom.local]: Enter

Please specify the master endpoint(s) this node should connect to: Enter

Master Common Name (CN from your master setup): icinga.infokom.local

Do you want to establish a connection to the master from this node? [Y/n]: y

Please fill out the master connection information: Enter

Master endpoint host (Your master's IP address or FQDN): 192.168.183.235

Master endpoint port [5665]: Enter

Add more master endpoints? [y/N]: Enter

Please specify the master connection for CSR auto-signing (defaults to master endpoint host): Enter

Host [192.168.183.235]: Enter

Port [5665]: Enter

information/base: Writing private key to '/etc/icinga2/pki/s2.infokom.local.key'.

information/base: Writing X509 certificate to '/etc/icinga2/pki/s2.infokom.local.crt'.

information/cli: Generating self-signed certifiate:

information/cli: Fetching public certificate from master (192.168.183.235, 5665):

information/cli: Writing trusted certificate to file '/etc/icinga2/pki/trusted-master.crt'.

information/cli: Stored trusted master certificate in '/etc/icinga2/pki/trusted-master.crt'.

Please specify the request ticket generated on your Icinga 2 master.

(Hint: # icinga2 pki ticket --cn 's2.infokom.local'): faaec3b98221622841cc437ee74b09a1f44b1ab

information/cli: Processing self-signed certificate request. Ticket 'faaec3b98221622841cc437ee74b09a1f44b1ab'.

information/cli: Created backup file '/etc/icinga2/pki/s2.infokom.local.crt.orig'.

information/cli: Writing signed certificate to file '/etc/icinga2/pki/s2.infokom.local.crt'.

information/cli: Writing CA certificate to file '/etc/icinga2/pki/ca.crt'.

Please specify the API bind host/port (optional): Enter

Bind Host []: Enter

Bind Port []: Enter

Accept config from master? [y/N]: y

Accept commands from master? [y/N]: y

information/cli: Disabling the Notification feature.

Disabling feature notification. Make sure to restart Icinga 2 for these changes to take effect.

information/cli: Enabling the Apilistener feature.

Enabling feature api. Make sure to restart Icinga 2 for these changes to take effect.

information/cli: Created backup file '/etc/icinga2/features-available/api.conf.orig'.

information/cli: Generating local zones.conf.

information/cli: Dumping config items to file '/etc/icinga2/zones.conf'.

information/cli: Created backup file '/etc/icinga2/zones.conf.orig'.

information/cli: Updating constants.conf.

information/cli: Created backup file '/etc/icinga2/constants.conf.orig'.

information/cli: Updating constants file '/etc/icinga2/constants.conf'.

information/cli: Updating constants file '/etc/icinga2/constants.conf'.

Done.

# to check

grep 's2' /etc/icinga2/constants.conf

mcedit /etc/icinga2/zones.conf

# change NodeName to your local machine name, in my case it's FQDN

mcedit /etc/icinga2/zones.conf

object Endpoint "icinga.infokom.local" {

host = "192.168.183.235"

port = "5665"

}

object Zone "master" {

endpoints = [ "icinga.infokom.local" ]

}

object Endpoint "s2.infokom.local" {

}

object Zone ZoneName {

endpoints = [ "s2.infokom.local" ]

parent = "master"

}

systemctl restart icinga2 && systemctl enable icinga2

# wait a bit and back to the icinga server:

icinga2 node list

# you SHOULD see your client server NOW

Node 's2.infokom.local' (last seen: Wed Jul 27 09:36:11 2016)

* Host 's2.infokom.local'

* Service 'apt'

[...]

icinga2 node update-config

systemctl reload icinga2.service

Open your web GUI and see your new server; it's in PENDING state now. Wait a bit or click the CHECK NOW button in the CHECK EXECUTION section.

Monday, June 27, 2016

File access auditing on a Windows fileserver: Data Leakage Prevention

Here is a clever script concept that helps company managers notice when someone reads an unusual number of files. That's typical behaviour for an employee who intends to quit and tries to steal all the company's files. Auditing software of this kind is on the market for several hundred or thousand bucks!

Luckily for you, I've written one in bash. OK, that's not good news for those who use only Windows. But it can easily be ported to any scripting language (PHP, Ruby, Python, etc.) so that it could run directly on the Windows fileserver or DC after installing the proper runtime environment.

Exploring that thought further, I'm going to translate it for myself. ;) But for now, it's enough to get it working in bash.

The original idea is that we assume every user opens roughly the same number of files each day in their daily routine. The script alerts whenever a user exceeds a statistical threshold percentage.

In the following example you are going to see a nice solution for lab use, in which I transfer the log file from the Windows server to a Linux server to be able to run the bash script on it. You can find detailed comments inside the script.

Step-by-step installation:

1: Enable the audit log policy on your Windows Server, assign it to the target folders and test it.

(Note: in the above blog you can find an advanced example. In my case I look for event ID 4663 because it contains just the information I need.) Set the audit rules according to your needs. The fewer event rules the better. We need to trace file reads, so the first rule is a must.

2: You need to export the specific events from the security log to a plain file. So create a getsec.ps1 file in c:\scripts\ with the following content:

Get-EventLog security -After (Get-Date).AddDays(-1) -InstanceId 4663 |select Message|ft -AutoSize -Wrap > c:\auditing\report.txt

3: Also, don't forget to create that c:\auditing folder and then put an empty file named mounted into it.

4: Schedule the script to run at the end of working hours or at midnight. The command should be (e.g.): C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe and the argument (e.g.): -executionpolicy bypass -file c:\scripts\getsec.ps1 2>&1 > C:\scripts\log.txt

5: Share the c:\auditing folder with a dedicated user that is used only by the Linux server, e.g.: linuxsrv

6: On your Linux box, install the following packages: cifs-utils dos2unix mutt iconv

7: Test your connection:

[ -f /mnt/mounted ] || mount.cifs //192.168.xx.xx/auditing/ /mnt/ -o 'username=linuxsrv,password=Sup3rS3cur3P4$$,domain=contoso'

8: Create the base directories, e.g.:

mkdir /root/auditor && cd /root/auditor

mkdir archive average stat users; echo "0" > counter

If that succeeded, congratulations: now you are ready to track your file access activity and watch out for possible data theft FOR FREE!

Here is the mighty script. See comments inline!
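The script itself is not reproduced in this copy of the post, so what follows is NOT the original script. It is only a minimal sketch of the idea described above: count the events per user and alert when a user's daily count exceeds their average by more than a threshold percentage. The function names, the 50 percent default and the assumption that each event 4663 message contains a line like "Account Name:  jdoe" are all illustrative, and the report is assumed to be already converted to UTF-8 with Unix line endings (iconv, dos2unix).

```shell
#!/usr/bin/env bash
# Sketch only, not the author's script: per-user event counts and a
# percentage threshold check. All names and defaults are hypothetical.

THRESHOLD_PERCENT=50   # assumed default: alert at 50% above the average

# Read a converted report on stdin and print "user count" lines, sorted.
# Assumes every event carries a line such as "Account Name:  jdoe".
count_per_user() {
  awk '/Account Name:/ {count[$NF]++}
       END {for (u in count) print u, count[u]}' | sort
}

# Succeed (exit 0) when the count is more than <percent> above the average.
# $1 = today's count, $2 = the user's average, $3 = percent (optional)
# Integer arithmetic only: count*100 > average*(100+percent)
over_threshold() {
  local count=$1 average=$2 percent=${3:-$THRESHOLD_PERCENT}
  [ $(( count * 100 )) -gt $(( average * (100 + percent) )) ]
}
```

A daily run could pipe the converted report through count_per_user, look up each user's stored average (for example a file under the average directory created in step 8) and send a mail with mutt whenever over_threshold succeeds.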

Tuesday, June 21, 2016

More Powershell

The original idea was to ease the recurring task of creating a new distribution group with one human member and an archive public folder. These mail-enabled security groups and public folders always get their names based on a company standard: Contoso GROUPNAME and Contoso_Groupname_Archive. The most exciting part is the waiting loop: we have to make sure that the new group is created and replicated across the DCs in the domain before going on. The script has to be run in an Exchange Management Shell.

Two minor notes: pfviewer is a special company group that contains all the users with viewer rights. jane.manager1 and john.manager2 are the company's head managers.

Import-Module activedirectory

$ShName = Read-Host "Please specify the new groupname, e.g.: TechGroup1"

$Name = "Contoso "+$ShName

if (!(dsquery group -samid $Name)){ NEW-ADGroup -name $Name -groupscope 2 -path "OU=ContMailLists,DC=co,DC=local" }else{Write-Host "WARNING: ADGroup already exists. PRESS CTRL+C to exit or take the consequences."}

$DotName ="contoso."+$ShName

$EmailADD = $DotName+"@contoso.com"

$PFName = "Contoso_"+$ShName+"_Archiv"

$Ember = Read-Host "Specify the login name of the user going to be a member of this group. E.g.: john.smith"

$FullPFName = "\"+$PFName

$PFEmail = "contoso"+$ShName+"Archiv@contoso.com"

$IfGroupExists = Get-DistributionGroup -name $Name -ErrorAction 'SilentlyContinue'

if( $IfGroupExists)

{

$IFSTOP = Read-Host "This distribution group already exists! Press CTRL+C to exit"

}

Write-Host -NoNewline "Please wait a bit. Shouldn't take long"

Do

{

If($Idx -gt 0) {Start-sleep -s 2}

$r = Get-ADGroup -Filter {SamAccountName -eq $Name}

Write-Host -NoNewline "."

$Idx = $Idx + 1

}

Until($r)

Enable-DistributionGroup -Identity "CN=$Name,OU=ContMailLists,DC=co,DC=local" -Alias $DotName

Set-DistributionGroup -Identity $Name -ManagedBy co.local\Admin -BypassSecurityGroupManagerCheck

Set-DistributionGroup -Identity $Name -RequireSenderAuthenticationEnabled 0 -PrimarySmtpAddress $EmailADD -WindowsEmailAddress $EmailADD -EmailAddressPolicyEnabled 0 -Alias $DotName -GrantSendOnBehalfTo jane.manager1, john.manager2, $Ember

New-PublicFolder -Name $PFName -Path \

Enable-MailPublicFolder -Identity $FullPFName -HiddenFromAddresslistsEnabled 1

Set-MailPublicFolder -Identity $FullPFName -EmailAddressPolicyEnabled 0

Set-MailPublicFolder -Identity $FullPFName -EmailAddresses $PFEmail

Add-PublicFolderClientPermission -Identity $FullPFName -accessrights ReadItems,CreateItems,FolderVisible -user pfviewer

Remove-PublicFolderClientPermission -Identity $FullPFName -accessrights ReadItems,EditOwnedItems,DeleteOwnedItems,FolderVisible -user default -Confirm:$false

Add-DistributionGroupMember -Identity $Name -member $PFName

Add-DistributionGroupMember -Identity $Name -member $Ember

Tuesday, May 31, 2016

Docker minihowto

To start a new container (if the image does not exist locally, Docker downloads a stock one from Docker Hub):

docker run -i -t centos:latest /bin/bash

(-i: interactive mode, keeps STDIN open) (-t: allocates a pseudo-terminal) (centos:latest: the image to run) (/bin/bash: the command to start, here a shell)

List running docker containers: docker ps

List all docker containers, including stopped ones: docker ps -a

List docker local images: docker images

Detach from a container and leave it running in the background: CTRL-P+CTRL-Q. To get a shell in a running container, use docker exec: docker exec -ti [CONTAINER-ID] bash

It starts a new process with a bash shell; you can leave it with exit or ^D, and that won't affect the original process.

On the host, find the docker virtual files (aufs), confs, etc. here: /var/lib/docker

See details about an image or a container: docker inspect IMAGENAME (e.g. centos:latest) or a container's random name | less

To build a new image: docker build -t MYIMAGENAME . (. = the directory where my Dockerfile is)

An example Dockerfile looks like:

FROM ubuntu:latest

RUN apt-get update

RUN apt-get install -y wget

RUN apt-get install -y build-essential tcl8.5

RUN wget http://download.redis.io/releases/redis-stable.tar.gz

RUN tar xzf redis-stable.tar.gz

RUN cd redis-stable && make && make install

RUN ./redis-stable/utils/install_server.sh

EXPOSE 6379

ENTRYPOINT ["redis-server"]

docker run -d -p 3000:3000 --name my-service centos:latest (starts in the background and maps the host's port 3000 (on all interfaces) to the container's service port 3000; the options must come before the image name)

To enter inside a container with bash: docker exec -i -t my-service /bin/bash

Tag (set an alias name for) an image: docker tag IMAGE_ID (seen in the output of docker images) REPONAME:TAG (e.g. mydockeruser/myrepo:2)

Now see what you have tagged: docker images

Enter dockerhub with your dockerhub login: docker login

Push your newly built image into your public repository: docker push REPONAME:TAG

Remove an image from localhost repository: docker rmi IMAGE_ID (force with -f)

For example, to start a new mariadb instance:

docker run --name mariadb-1 -p 3306:3306 -e MYSQL_ROOT_PASSWORD=mypass -v /home/ubuntu/db/db1:/var/lib/mysql -d mariadb

(With -v you mount a folder of your localhost into your container. With -e you pass an environment variable to the container.

Passing a variable, for example: docker run -i -t -e "WHOISTHEKING=me" ubuntu:14.04 /bin/bash -> echo $WHOISTHEKING)

Insert a file into the container directly from outside:

docker insert CONTAINERNAME http://ftp.drupal.org/files/projects/drupal-7.22.tar.gz /root/drupal.tar.gz

(Note: docker insert has been removed from newer Docker releases; docker cp or an ADD line in the Dockerfile does the same job.)

To commit your changes to a new image: docker commit -m "commit message" -a "Your Name" CONTAINER_ID username/my-redis:latest

TO BE CONTINUED
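Since docker insert is, as far as I know, no longer shipped with newer Docker releases, the same result can be had by downloading the file on the host and copying it in with docker cp. A minimal sketch, assuming curl is available on the host; the function name insert_from_url is made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch only: a stand-in for the old "docker insert" using curl + docker cp.
# The function name is hypothetical.

# $1 = container name, $2 = source URL, $3 = destination path in the container
insert_from_url() {
  local tmp
  tmp="$(mktemp)" || return 1
  # Download to a temporary file, then copy it into the container.
  curl -fsSL -o "$tmp" "$2" && docker cp "$tmp" "$1:$3"
  local rc=$?
  rm -f "$tmp"   # clean up the temporary file in any case
  return "$rc"
}
```

With the example from above, the call would be: insert_from_url CONTAINERNAME http://ftp.drupal.org/files/projects/drupal-7.22.tar.gz /root/drupal.tar.gz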

Thursday, May 19, 2016

GlusterFS in a simple way

Here is the story of how I managed to install a 2-node GlusterFS on CentOS, plus one client for test purposes.

In my case the hostnames and the IPs were:

192.168.183.235 s1

192.168.183.236 s2

192.168.183.237 c1

Append these to the end of /etc/hosts to make sure that simple name resolution will work.

Execute the following on both servers.

rpm -ivh http://dl.fedoraproject.org/pub/epel/7/x86_64/e/epel-release-7-5.noarch.rpm

wget -P /etc/yum.repos.d http://download.gluster.org/pub/gluster/glusterfs/3.7/3.7.5/CentOS/glusterfs-epel.repo

yum -y install glusterfs glusterfs-fuse glusterfs-server

There is no need to install any of the samba packages if you don't intend to use SMB.

systemctl enable glusterd.service

Created symlink from /etc/systemd/system/multi-user.target.wants/glusterd.service to /usr/lib/systemd/system/glusterd.service.

Both servers had a second 20 GB disk named sdb. I created two LVs for two bricks.

[root@s2 ~]# lvcreate -L 9G -n brick2 glustervg

Logical volume "brick2" created.

[root@s2 ~]# lvcreate -L 9G -n brick1 glustervg

Logical volume "brick1" created.

[root@s1 ~]# vgcreate glustervg /dev/sdb

Volume group "glustervg" successfully created

[root@s1 ~]# lvcreate -L 9G -n brick2 glustervg

Logical volume "brick2" created.

[root@s1 ~]# lvcreate -L 9G -n brick1 glustervg

Logical volume "brick1" created.

[root@s2 ~]# pvdisplay

--- Physical volume ---

PV Name /dev/sdb

VG Name glustervg

PV Size 20.00 GiB / not usable 4.00 MiB

Allocatable yes

PE Size 4.00 MiB

Total PE 5119

Free PE 511

Allocated PE 4608

PV UUID filZyX-wR7W-luFX-Asyn-fYA3-f7tf-q4xGyU

[...]

[root@s2 ~]# lvdisplay

--- Logical volume ---

LV Path /dev/glustervg/brick2

LV Name brick2

VG Name glustervg

LV UUID Rx3FPi-S3ps-x3Z0-FZrU-a2tq-IxS0-4gD2YQ

LV Write Access read/write

LV Creation host, time s2, 2016-05-18 16:02:41 +0200

LV Status available

# open 0

LV Size 9.00 GiB

Current LE 2304

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:3

--- Logical volume ---

LV Path /dev/glustervg/brick1

LV Name brick1

VG Name glustervg

LV UUID P5slcZ-dC7R-iFWv-e0pY-rvyb-YrPm-FM7YuP

LV Write Access read/write

LV Creation host, time s2, 2016-05-18 16:02:43 +0200

LV Status available

# open 0

LV Size 9.00 GiB

Current LE 2304

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:4

[...]

[root@s1 ~]# lvdisplay

--- Logical volume ---

LV Path /dev/glustervg/brick2

LV Name brick2

VG Name glustervg

LV UUID 7yC2Wl-0lCJ-b7WZ-rgy4-4BMl-mT0I-CUtiM2

LV Write Access read/write

LV Creation host, time s1, 2016-05-18 16:01:56 +0200

LV Status available

# open 0

LV Size 9.00 GiB

Current LE 2304

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:2

--- Logical volume ---

LV Path /dev/glustervg/brick1

LV Name brick1

VG Name glustervg

LV UUID X6fzwM-qdRi-BNKH-63fa-q2O9-jvNw-u2geA2

LV Write Access read/write

LV Creation host, time s1, 2016-05-18 16:02:05 +0200

LV Status available

# open 0

LV Size 9.00 GiB

Current LE 2304

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:3

[...]

[root@s1 ~]# mkfs.xfs /dev/glustervg/brick1

meta-data=/dev/glustervg/brick1 isize=256 agcount=4, agsize=589824 blks

= sectsz=4096 attr=2, projid32bit=1

= crc=0 finobt=0

data = bsize=4096 blocks=2359296, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=0

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=4096 sunit=1 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@s1 ~]# mkfs.xfs /dev/glustervg/brick2

meta-data=/dev/glustervg/brick2 isize=256 agcount=4, agsize=589824 blks

= sectsz=4096 attr=2, projid32bit=1

= crc=0 finobt=0

data = bsize=4096 blocks=2359296, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=0

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=4096 sunit=1 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@s1 ~]# mkdir -p /gluster/brick{1,2}

[root@s2 ~]# mkdir -p /gluster/brick{1,2}

[root@s1 ~]# mount /dev/glustervg/brick1 /gluster/brick1 && mount /dev/glustervg/brick2 /gluster/brick2

[root@s2 ~]# mount /dev/glustervg/brick1 /gluster/brick1 && mount /dev/glustervg/brick2 /gluster/brick2

Add the following lines to /etc/fstab on both servers:

/dev/mapper/glustervg-brick1 /gluster/brick1 xfs rw,relatime,seclabel,attr2,inode64,noquota 0 0

/dev/mapper/glustervg-brick2 /gluster/brick2 xfs rw,relatime,seclabel,attr2,inode64,noquota 0 0
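If you script this step, it helps to make it safe to run twice. Below is a minimal sketch, assuming a made-up helper called `append_once`. It works on a scratch file here, not on the real /etc/fstab.

```shell
# Hypothetical helper: append a line to a file only if that exact line
# is not already present, so re-running the setup does not duplicate it.
append_once() (
    file=$1
    line=$2
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
)

# Demonstration against a scratch file instead of the real /etc/fstab.
demo_fstab=$(mktemp)
for lv in brick1 brick2; do
    entry="/dev/mapper/glustervg-$lv /gluster/$lv xfs rw,relatime,seclabel,attr2,inode64,noquota 0 0"
    append_once "$demo_fstab" "$entry"
    append_once "$demo_fstab" "$entry"   # second call is a no-op
done
cat "$demo_fstab"
```

On a real server you would point it at /etc/fstab and then run `mount -a` to see whether the entries are accepted.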

[root@s1 etc]# systemctl start glusterd.service

Making sure:

[root@s1 etc]# ps ax|grep gluster

1010 ? Ssl 0:00 /usr/sbin/glusterd -p /var/run/glusterd.pid --log-level INFO

[root@s1 etc]# gluster peer probe s2

peer probe: success.

[root@s2 etc]# gluster peer status

Number of Peers: 1

Hostname: 192.168.183.235

Uuid: f5bdc3f3-0b43-4a83-86c1-c174594566b9

State: Peer in Cluster (Connected)

[root@s1 etc]# gluster pool list

UUID Hostname State

01cf8a70-d00f-487f-875e-9e38d4529b57 s2 Connected

f5bdc3f3-0b43-4a83-86c1-c174594566b9 localhost Connected
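Checking the pool by eye is fine for two nodes, but it can also be scripted. Here is a rough sketch, assuming the three-column layout of `gluster pool list` shown above. The function name `disconnected_peers` is my own invention, not part of Gluster.

```shell
# Hypothetical helper: read `gluster pool list` output on stdin and
# print every host whose state is not "Connected".
# Exit status is 0 only when all peers are connected.
disconnected_peers() {
    awk 'NR > 1 && NF >= 3 && $NF != "Connected" { print $2; bad = 1 }
         END { exit bad }'
}

# Sample input copied from the session above; on a real server this
# would be:  gluster pool list | disconnected_peers
sample='UUID Hostname State
01cf8a70-d00f-487f-875e-9e38d4529b57 s2 Connected
f5bdc3f3-0b43-4a83-86c1-c174594566b9 localhost Connected'

if printf '%s\n' "$sample" | disconnected_peers; then
    echo "all peers connected"
fi
```

Running such a check before `gluster volume create` catches peers that have dropped out of the pool. As the next steps show, it says nothing about name resolution between the nodes.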

[root@s1 etc]# gluster volume status

No volumes present

[root@s2 etc]# gluster volume info

No volumes present

[root@s1 etc]# mkdir /gluster/brick1/mpoint1

[root@s2 etc]# mkdir /gluster/brick1/mpoint1

[root@s1 gluster]# gluster volume create myvol1 replica 2 transport tcp s1:/gluster/brick1/mpoint1 s2:/gluster/brick1/mpoint1

volume create: myvol1: failed: Staging failed on s2. Error: Host s1 is not in 'Peer in Cluster' state

Ooooops....

[root@s2 glusterfs]# ping s1

ping: unknown host s1

I forgot to check name resolution. When I fixed this and tried to create the volume again, I got:

[root@s1 glusterfs]# gluster volume create myvol1 replica 2 transport tcp s1:/gluster/brick1/mpoint1 s2:/gluster/brick1/mpoint1

volume create: myvol1: failed: /gluster/brick1/mpoint1 is already part of a volume

WTF ??

[root@s1 glusterfs]# gluster volume get myvol1 all

volume get option: failed: Volume myvol1 does not exist

[root@s1 glusterfs]# gluster

gluster>

exit global help nfs-ganesha peer pool quit snapshot system:: volume

gluster> volume

add-brick bitrot delete heal inode-quota profile remove-brick set status tier

attach-tier clear-locks detach-tier help list quota replace-brick start stop top

barrier create get info log rebalance reset statedump sync

gluster> volume l

list log

gluster> volume list

No volumes present in cluster

That's odd! Hmm. The brick directory was probably still marked by the first, failed attempt, so I removed it and hoped that would work:

[root@s1 /]# rm -r /gluster/brick1/mpoint1

[root@s1 /]# gluster volume create myvol1 replica 2 transport tcp s1:/gluster/brick1/mpoint1 s2:/gluster/brick1/mpoint1

volume create: myvol1: success: please start the volume to access data

[root@s1 /]# gluster volume list

myvol1

Yep. Success. Phuhh.
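The "already part of a volume" error usually means that the brick directory (or one of its parents) still carries Gluster's extended attributes from an earlier attempt. Removing the directory gets rid of them. A commonly cited alternative, which I did not use here, is to strip only the metadata. The sketch below prints the commands instead of running them. The helper name `brick_cleanup_plan` is made up, and the attribute names are an assumption about what the failed attempt left behind.

```shell
# Hypothetical dry-run helper: print (not execute) the commands that
# would clear Gluster's leftover metadata from a brick directory.
# Assumption: the leftovers are the trusted.glusterfs.volume-id and
# trusted.gfid extended attributes plus the .glusterfs directory.
brick_cleanup_plan() (
    brick=$1
    echo "setfattr -x trusted.glusterfs.volume-id $brick"
    echo "setfattr -x trusted.gfid $brick"
    echo "rm -rf $brick/.glusterfs"
)

brick_cleanup_plan /gluster/brick1/mpoint1
```

Review the printed commands before pasting them into a root shell. On an empty test brick like mine, simply deleting the directory is easier.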

[root@s1 /]# gluster volume start myvol1

volume start: myvol1: success

[root@s2 etc]# gluster volume list

myvol1

[root@s2 etc]# gluster volume status

Status of volume: myvol1

Gluster process TCP Port RDMA Port Online Pid

------------------------------------------------------------------------------

Brick s1:/gluster/brick1/mpoint1 49152 0 Y 2528

Brick s2:/gluster/brick1/mpoint1 49152 0 Y 10033

NFS Server on localhost 2049 0 Y 10054

Self-heal Daemon on localhost N/A N/A Y 10061

NFS Server on 192.168.183.235 2049 0 Y 2550

Self-heal Daemon on 192.168.183.235 N/A N/A Y 2555

Task Status of Volume myvol1

------------------------------------------------------------------------------

There are no active volume tasks

[root@s1 ~]# gluster volume create myvol2 s1:/gluster/brick2/mpoint2 s2:/gluster/brick2/mpoint2 force

volume create: myvol2: success: please start the volume to access data

[root@s1 ~]# gluster volume start myvol2

volume start: myvol2: success

[root@s1 ~]# gluster volume info

Volume Name: myvol1

Type: Replicate

Volume ID: 633b765b-c630-4007-91ca-dc42714bead4

Status: Started

Number of Bricks: 1 x 2 = 2

Transport-type: tcp

Bricks:

Brick1: s1:/gluster/brick1/mpoint1

Brick2: s2:/gluster/brick1/mpoint1

Options Reconfigured:

performance.readdir-ahead: on

Volume Name: myvol2

Type: Distribute

Volume ID: ebfa9134-0e6a-40be-8045-5b16436b88ed

Status: Started

Number of Bricks: 2

Transport-type: tcp

Bricks:

Brick1: s1:/gluster/brick2/mpoint2

Brick2: s2:/gluster/brick2/mpoint2

Options Reconfigured:

performance.readdir-ahead: on

On the client:

[root@c1 ~]# wget -P /etc/yum.repos.d http://download.gluster.org/pub/gluster/glusterfs/LATEST/CentOS/glusterfs-epel.repo

[...]

[root@c1 ~]# yum -y install glusterfs glusterfs-fuse

[....]

[root@c1 ~]# mkdir /g{1,2}

[root@c1 ~]# mount.glusterfs s1:/myvol1 /g1

[root@c1 ~]# mount.glusterfs s1:/myvol2 /g2

[root@c1 ~]# mount

[...]

s1:/myvol1 on /g1 type fuse.glusterfs (rw,relatime,user_id=0,group_id=0,default_permissions,allow_other,max_read=131072)

s2:/myvol2 on /g2 type fuse.glusterfs (rw,relatime,user_id=0,group_id=0,default_permissions,allow_other,max_read=131072)

[root@c1 ]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/centos-root 28G 1.1G 27G 4% /

devtmpfs 422M 0 422M 0% /dev

tmpfs 431M 0 431M 0% /dev/shm

tmpfs 431M 5.7M 426M 2% /run

tmpfs 431M 0 431M 0% /sys/fs/cgroup

/dev/sda1 494M 164M 331M 34% /boot

tmpfs 87M 0 87M 0% /run/user/0

s1:/myvol1 9.0G 34M 9.0G 1% /g1 (9G mirrored on 9G because of replication, aka RAID1 over the network)

s2:/myvol2 18G 66M 18G 1% /g2 (9G+9G because of distribution, aka JBOD over the network)
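The two sizes follow from simple arithmetic. Here is a tiny sketch, assuming equally sized bricks and a made-up helper name `usable_gib`. It ignores filesystem overhead.

```shell
# Hypothetical helper: usable capacity in GiB for equally sized bricks.
# replica=1 means a plain distributed volume.
usable_gib() (
    brick_gib=$1
    bricks=$2
    replica=$3
    echo $(( brick_gib * bricks / replica ))
)

echo "myvol1 (replica 2): $(usable_gib 9 2 2)G"
echo "myvol2 (distribute): $(usable_gib 9 2 1)G"
```

This gives 9G for the replicated volume and 18G for the distributed one, the same as df reports.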

What is the difference between distributing and striping? Here are two short snippets from the glusterhacker blog:

Distribute: A distribute volume is one in which all the data of the volume is distributed throughout the bricks. Based on an algorithm that takes into account the size available in each brick, the data will be stored in any one of the available bricks. [...] The default volume type is distribute, hence my myvol2 got distributed.

Stripe: A stripe volume is one in which the data being stored in the backend is striped into units of a particular size among the bricks. The default unit size is 128KB, but it's configurable. If we create a striped volume of stripe count 3, and then create a 300KB file at the mount point, the first 128KB will be stored in the first sub-volume (brick in our case), the next 128KB in the second, and the remaining 44KB in the third. The number of bricks should be a multiple of the stripe count.
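To make the striping example concrete, here is a small sketch that hands out fixed-size chunks to the bricks in round-robin order and sums up what each brick receives. The function name `stripe_layout` is hypothetical. It is only a model of the description above, not how Gluster is implemented internally.

```shell
# Hypothetical helper: print how many KB of a file land on each brick
# when chunks of unit KB are handed out round-robin to count bricks.
stripe_layout() {
    awk -v size="$1" -v unit="$2" -v count="$3" 'BEGIN {
        for (i = 0; size > 0; i++) {
            chunk = (size < unit) ? size : unit
            total[i % count] += chunk
            size -= chunk
        }
        for (b = 0; b < count; b++)
            printf "brick%d: %d KB\n", b + 1, total[b]
    }'
}

stripe_layout 300 128 3
```

For the 300 KB file this reports 128 KB, 128 KB and 44 KB. With a larger file the chunks wrap around, so every brick gets several units.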

The very usable official howto is here.

Performance test, split brain, to be continued....

In my case the hostnames and the IPs were:

192.168.183.235 s1

192.168.183.236 s2

192.168.183.237 c1

Append these to the end of /etc/hosts to make sure that simple name resolution will work.
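Since a missing hosts entry cost me a failed volume create, a quick check is worth having. The sketch below assumes a made-up helper `missing_hosts` that looks for each name in a hosts-style file. The demo uses a scratch file so it can be tried anywhere.

```shell
# Hypothetical helper: print each given name that has no entry in the
# given hosts file (comment lines are ignored).
missing_hosts() (
    hosts_file=$1
    shift
    for name in "$@"; do
        awk -v n="$name" '
            /^[[:space:]]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$hosts_file" || echo "$name"
    done
)

# Demonstration against a scratch file; pass /etc/hosts on a real node.
demo_hosts=$(mktemp)
printf '%s\n' '192.168.183.235 s1' '192.168.183.237 c1' > "$demo_hosts"
missing_hosts "$demo_hosts" s1 s2 c1
```

In the demo only s2 is reported, because the scratch file has no line for it. Run it on every node with `/etc/hosts` as the first argument before probing peers.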

Execute the following on both servers.

rpm -ivh http://dl.fedoraproject.org/pub/epel/7/x86_64/e/epel-release-7-5.noarch.rpm

wget -P /etc/yum.repos.d http://download.gluster.org/pub/gluster/glusterfs/3.7/3.7.5/CentOS/glusterfs-epel.repo

yum -y install glusterfs glusterfs-fuse glusterfs-server

There is no need to install any of the Samba packages if you don't intend to use SMB.

systemctl enable glusterd.service

Created symlink from /etc/systemd/system/multi-user.target.wants/glusterd.service to /usr/lib/systemd/system/glusterd.service.

Monday, April 11, 2016

A Mikrotik guest network can be more difficult than you may think

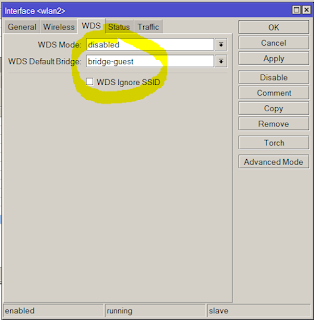

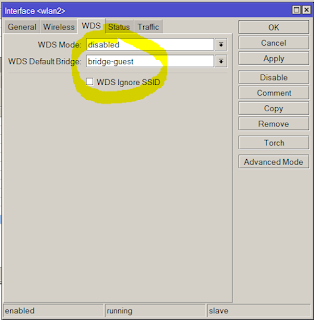

In recent RouterOS is a single click to set up a guest wifi AP. Saying guest I mean such a network that is fully or partly allowed to reach public internet but denied to reach the internal private network. Here is a simple howto about adding a second wifi AP/ slave interface. The only problem with that is it's unsecure. :( A most common way is using the QuickSet method. Everyone knows what to do seeing this window:

So if you I build a second AP like this:

it's going to use the same DHCP server as the internal WIFI. Obviously, because it's on the same bridge (switch) interface. I always wondered how they are still separeted by the RouterOS? The answer is Mikrotik's genius Layer2 firewall called Bridge filtering.

But you discover an embarassing problem if you have more IP subnets (e.g. VPN networks over pub net) and also want to accept the guest wifi filtering to them. One simply can't utilize Layer2 filtering over Layer3 routing and, of course, there is no work vice versa.

Soution: forget the built-in bridge and create a new bridge only for your guest wifi.

So far so good. Layer2 filtering is done now. But now the guests are totally separeted from your DHCP server so you need to create a new, dedicated DHCP pool for them. It requires a new address and subnet.

Lets suppose that you have such a source nating rule that nats anything that is going out to the internet:

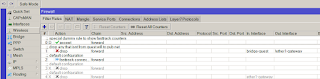

In that case we have good news. You don't have to set up any more nat rule because the guest network will hit the above rule. But it's not secured yet. The following Layer3 high priority firewall rule will take care of them:

So if you I build a second AP like this:

it's going to use the same DHCP server as the internal WIFI. Obviously, because it's on the same bridge (switch) interface. I always wondered how they are still separeted by the RouterOS? The answer is Mikrotik's genius Layer2 firewall called Bridge filtering.

But you discover an embarassing problem if you have more IP subnets (e.g. VPN networks over pub net) and also want to accept the guest wifi filtering to them. One simply can't utilize Layer2 filtering over Layer3 routing and, of course, there is no work vice versa.

Soution: forget the built-in bridge and create a new bridge only for your guest wifi.

/interface bridge add name=bridge-guestwifiAdd a new security profile for guest if you happen to still doesn't have any:

/interface wireless security-profiles add authentication-types=wpa2-psk mode=dynamic-keys name=guestwifi wpa2-pre-shared-key=topsecretpasswordAdd your new slave interface:

/interface wireless add disabled=no mac-address=D6:CA:6E:4F:54:28 master-interface=wlan1 name=wlan2 security-profile=guest ssid="For Guests" wds-default-bridge=bridge-guestwifiand link these 2 to each other.

/interface bridge port add bridge=bridge-guestwifi interface=wlan2

So far so good. Layer2 filtering is done now. But now the guests are totally separeted from your DHCP server so you need to create a new, dedicated DHCP pool for them. It requires a new address and subnet.

/ip address add address=192.168.100.1/24 interface=bridge-guest network=192.168.100.0 /ip pool add name=guest ranges=192.168.100.100-192.168.100.254 /ip dhcp-server add address-pool=guest disabled=no interface=bridge-guest name=guest /ip dhcp-server network add address=192.168.100.0/24 dns-server=192.168.100.1 gateway=192.168.100.1

Lets suppose that you have such a source nating rule that nats anything that is going out to the internet:

In that case we have good news. You don't have to set up any more nat rule because the guest network will hit the above rule. But it's not secured yet. The following Layer3 high priority firewall rule will take care of them:

/ip firewall filter add action=drop chain=forward in-interface=bridge-guestwifi out-interface=!ether1-gatewaySo from now on, guests are denied to go anywhere but the public internet.
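The address, DHCP server and firewall commands all have to name the same bridge, and it is easy to mistype one of them. One way to avoid that is to generate the Layer3 part from a few variables. This is just a sketch in plain shell that prints text. The function name `guest_net_commands` and its three parameters are my own invention, and the printed commands are the ones from this post with the values substituted.

```shell
# Hypothetical generator: print the RouterOS commands from this post
# with one bridge name and one subnet prefix substituted everywhere.
# It only prints text; paste the result into a RouterOS terminal.
guest_net_commands() (
    bridge=$1      # e.g. bridge-guestwifi
    prefix=$2      # first three octets, e.g. 192.168.100
    wan=$3         # internet-facing interface, e.g. ether1-gateway
    cat <<EOF
/ip address add address=$prefix.1/24 interface=$bridge network=$prefix.0
/ip pool add name=guest ranges=$prefix.100-$prefix.254
/ip dhcp-server add address-pool=guest disabled=no interface=$bridge name=guest
/ip dhcp-server network add address=$prefix.0/24 dns-server=$prefix.1 gateway=$prefix.1
/ip firewall filter add action=drop chain=forward in-interface=$bridge out-interface=!$wan
EOF
)

guest_net_commands bridge-guestwifi 192.168.100 ether1-gateway
```

The sketch assumes a /24 guest subnet with the router on .1, as in my setup. Anything else needs the template adjusted.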

Thursday, April 7, 2016

Ban / reject users with freeradius based on MAC addresses

FreeRADIUS is a common tool for setting up enterprise WiFi authentication. But in a public institution, e.g. a school, sooner or later your WiFi users' passwords will leak out, and after the password changes your logs fill up with incorrect logins from the mischievous students. Solution: build a script that scans the logfile for incorrect logins and bans the MAC addresses of those devices. Here is a little help on how to start thinking:

add the following to your /etc/freeradius/modules/files

files rejectmac {

key = "%{Calling-Station-ID}"

usersfile = ${confdir}/rejectmacaddress.txt

compat = no

}

add the following to authorize{} section of your /etc/freeradius/sites/sites-enabled/default

rejectmac

if (ok) {

reject

}

create a new file /etc/freeradius/rejectmac.conf and add

passwd rejectmac {

filename = /etc/freeradius/rejectmacaddress.txt

delimiter = ,

format = "*Calling-Station-Id"

}

create a new file /etc/freeradius/rejectmacaddress.txt and fill it with the kiddies' MACs like this

78-F8-82-F3-8F-58,B4-CE-F6-4D-74-93,B0-45-19-C6-17-D1,50-F0-D3-1D-42-CE,00-5A-05-90-08-FE,88-07-4B-D1-17-15

add this to the beginning of your radiusd.conf

$INCLUDE rejectmac.conf

restart your freeradius daemon and get ready to go home.
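The log-scanning script mentioned at the beginning could start out like the sketch below. It rests on assumptions: that failed logins appear in the log as lines containing "Login incorrect" and ending in "cli <MAC>)", and that the MACs use hyphens as in my list above. The function name `macs_to_ban` and the sample log lines are made up for the demo.

```shell
# Hypothetical helper: list Calling-Station-Id values that show up in
# at least `threshold` "Login incorrect" lines of a FreeRADIUS log.
# Assumption: each failure line ends with "... cli <MAC>)".
macs_to_ban() (
    log_file=$1
    threshold=$2
    grep 'Login incorrect' "$log_file" |
        sed -n 's/.* cli \([0-9A-Fa-f-]*\)).*/\1/p' |
        sort | uniq -c |
        awk -v t="$threshold" '$1 >= t { print $2 }'
)

# Made-up sample lines, only to exercise the function.
demo_log=$(mktemp)
cat > "$demo_log" <<'EOF'
Thu Apr  7 10:00:01 2016 : Auth: Login incorrect: [kid1/<via Auth-Type = EAP>] (from client ap1 port 0 cli 78-F8-82-F3-8F-58)
Thu Apr  7 10:00:05 2016 : Auth: Login incorrect: [kid1/<via Auth-Type = EAP>] (from client ap1 port 0 cli 78-F8-82-F3-8F-58)
Thu Apr  7 10:00:09 2016 : Auth: Login incorrect: [kid2/<via Auth-Type = EAP>] (from client ap1 port 0 cli B4-CE-F6-4D-74-93)
Thu Apr  7 10:01:00 2016 : Auth: Login OK: [teacher/<via Auth-Type = EAP>] (from client ap1 port 0 cli 00-5A-05-90-08-FE)
EOF

# Join the result with commas, like the list in rejectmacaddress.txt.
macs_to_ban "$demo_log" 2 | paste -sd, -
```

With a threshold of 2 only the first device is listed. Check the pattern against your own log before trusting it, because the exact line format depends on your FreeRADIUS version and logging settings.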

Wednesday, April 6, 2016

Debian Wheezy Mail Server – Postfix Dovecot Sasl MySQL PostfixAdmin and RoundCube

Shamefully, I didn't want to find my own way, so I followed a complete tutorial, which is here.

For my own future use, I attached the working nginx, dovecot, postfix and php5 configs to this post. There are two minor differences from the original tutorial. First, I don't use spam filtering because in my case it's done by a 3rd party provider. Second, I use an outgoing TLS smarthost via the mail submission port 587, detailed in postfix/main.cf.

Note that all sensitive information is removed, and a socket is missing from the tgz, obviously, because sockets can't be packed. (tar example/php5/fpm/socks/ssl_example.com.sock: socket ignored.)

Follow the original howto first.

Versions for my pack are:

Linux box 3.2.0-4-amd64 #1 SMP Debian 3.2.73-2+deb7u3 x86_64 GNU/Linux

ii nginx 1.2.1-2.2+wheezy4 all small, powerful, scalable web/proxy server

ii nginx-common 1.2.1-2.2+wheezy4 all small, powerful, scalable web/proxy server - common files

ii nginx-full 1.2.1-2.2+wheezy4 amd64 nginx web/proxy server (standard version)

ii dovecot-common 1:2.1.7-7+deb7u1 all Transitional package for dovecot

ii dovecot-core 1:2.1.7-7+deb7u1 amd64 secure mail server that supports mbox, maildir, dbox and mdbox mailboxes

ii dovecot-gssapi 1:2.1.7-7+deb7u1 amd64 GSSAPI authentication support for Dovecot

ii dovecot-imapd 1:2.1.7-7+deb7u1 amd64 secure IMAP server that supports mbox, maildir, dbox and mdbox mailboxes

ii dovecot-ldap 1:2.1.7-7+deb7u1 amd64 LDAP support for Dovecot

ii dovecot-lmtpd 1:2.1.7-7+deb7u1 amd64 secure LMTP server for Dovecot

ii dovecot-mysql 1:2.1.7-7+deb7u1 amd64 MySQL support for Dovecot

ii dovecot-pgsql 1:2.1.7-7+deb7u1 amd64 PostgreSQL support for Dovecot

ii dovecot-pop3d 1:2.1.7-7+deb7u1 amd64 secure POP3 server that supports mbox, maildir, dbox and mdbox mailboxes

ii dovecot-sieve 1:2.1.7-7+deb7u1 amd64 sieve filters support for Dovecot

ii dovecot-sqlite 1:2.1.7-7+deb7u1 amd64 SQLite support for Dovecot

ii postfix 2.9.6-2 amd64 High-performance mail transport agent

ii postfix-mysql 2.9.6-2 amd64 MySQL map support for Postfix

ii php5-common 5.5.33-1~dotdeb+7.1 amd64 Common files for packages built from the php5 source

ii php5-fpm 5.5.33-1~dotdeb+7.1 amd64 server-side, HTML-embedded scripting language (FPM-CGI binary)

ii php5-imap 5.5.33-1~dotdeb+7.1 amd64 IMAP module for php5

ii php5-intl 5.5.33-1~dotdeb+7.1 amd64 internationalisation module for php5

ii php5-mcrypt 5.5.33-1~dotdeb+7.1 amd64 MCrypt module for php5

ii php5-mysql 5.5.33-1~dotdeb+7.1 amd64 MySQL module for php5
